3D Imaging & Computer Vision

I've been developing 3D rendering algorithms since the early 70s. I began creating computer vision solutions in the mid-80s, scalable media cloud/servers in the 90s, 3D mobile apps in the 00s, and 3D embedded/IoT media systems from the 10s to the present.

My passion is building teams that create 3D imaging and computer vision solutions that benefit people. I've managed software and hardware teams of up to 50 engineers while remaining a hands-on, cross-platform, full-stack developer.

Portfolio

- MedChroma: 3D Medical Imaging Service

- Naked Labs: 3D Body Scanning System

- Bellus3D: 3D Head/Face Scanning System

- Computer Vision: my journey to Apple

- SKUR: Lidar 3D Facilities Scanning System

- Patents: and other innovations

- Portfolio: how I built it

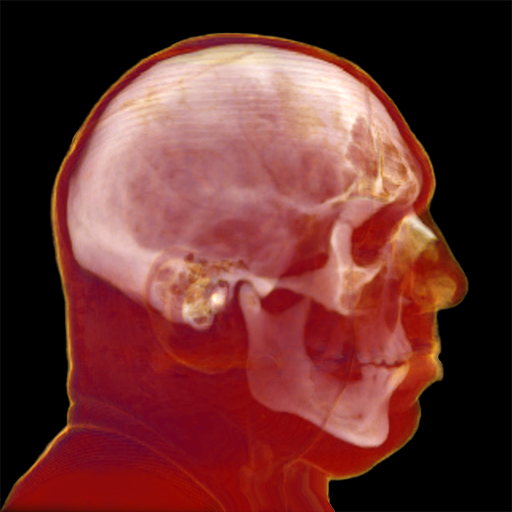

Rendering CT Scans

I mapped the scan's CT density values to body tissues (bone, cartilage, muscle, fat, skin), assigning a color and transparency to each tissue type.
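As a rough illustration of this kind of density-to-tissue mapping (not the project's actual code), the sketch below classifies a CT sample by Hounsfield-unit band and looks up an RGBA value. The band boundaries, the reduced set of tissue names, and the colors are illustrative assumptions only, and `classify` is a hypothetical helper.

```python
from bisect import bisect_right

# Upper bounds (Hounsfield units) separating the bands, in ascending order.
# ASSUMPTION: these cut-offs and colors are placeholders for illustration.
BOUNDS = [-200, -20, 150]
TISSUES = [
    ("air",    (0.0, 0.0, 0.0, 0.0)),   # fully transparent
    ("fat",    (1.0, 0.9, 0.5, 0.15)),
    ("muscle", (0.8, 0.2, 0.2, 0.4)),
    ("bone",   (1.0, 1.0, 0.9, 1.0)),   # fully opaque
]

def classify(hu):
    """Return (tissue name, RGBA) for one CT density sample."""
    # bisect_right finds which band the sample falls into.
    return TISSUES[bisect_right(BOUNDS, hu)]

name, rgba = classify(40)
print(name, rgba)
```

A table lookup like this is cheap enough to bake into a 1D texture or lookup array, so the per-sample cost at render time stays constant.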

WebGL Translucency Rendering

This weekend project was a test of tissue color/alpha mapping and an evaluation of WebGL performance. I rendered the CT scan slices as texture-mapped planes (billboards), resulting in the expected gap band artifacts you can see along the side view.

To support translucency, the slices must be rendered in back-to-front order. I avoided sorting slices by using the model-view orientation to determine which slice is to the right of the eye view, then using a reversed slice list on that side.
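The idea of choosing a draw order from the view orientation, rather than sorting, can be sketched as follows. This is a simplified version under stated assumptions: slices are stacked along the model's +z axis in index order, the projection is orthographic (or the eye is far from the volume), and the camera looks down -z in eye space as in OpenGL. `rotation_y` and `back_to_front` are hypothetical names.

```python
import math

def rotation_y(degrees):
    """Row-major 3x3 rotation about y, standing in for the model-view rotation."""
    c = math.cos(math.radians(degrees))
    s = math.sin(math.radians(degrees))
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]

def back_to_front(slice_count, model_view_rotation):
    """Return slice indices in draw order, farthest slice first."""
    # The eye-space z component of the model's +z axis is element [2][2].
    axis_z = model_view_rotation[2][2]
    order = list(range(slice_count))
    # Stack axis pointing toward the viewer: slice 0 is farthest, draw it first.
    # Stack axis pointing away: slice 0 is nearest, so walk the list in reverse.
    return order if axis_z >= 0 else order[::-1]

print(back_to_front(4, rotation_y(0)))
print(back_to_front(4, rotation_y(180)))
```

Because only the sign of one matrix element is inspected, the cost per frame is constant regardless of how many slices the scan contains.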


My follow-up project was a ray casting iPhone app to display CT and MRI scans.

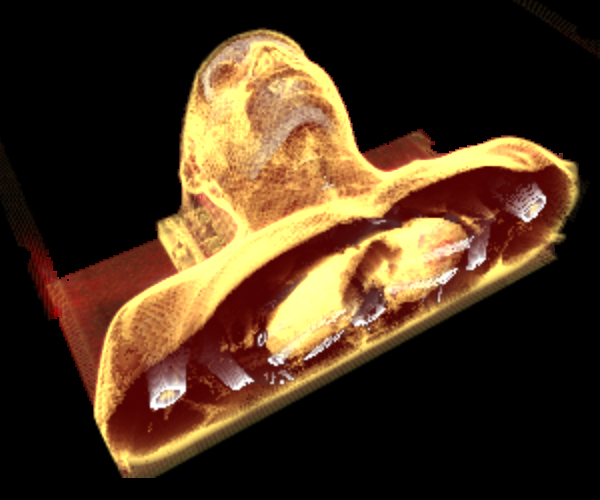

Optimized Ray Casting

My innovations for this project were a new ray casting algorithm that works both back-to-front and front-to-back for optimized translucency, tissue segmentation classifiers, and normal map generation from tissue segments.

I also created a fast algorithm for determining how many rows and columns are in texture maps, to support user-supplied images with no metadata.
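For readers unfamiliar with the two traversal directions, the sketch below shows the standard textbook compositing rules along a single ray, not the algorithm described above. Back-to-front uses the "over" operator; front-to-back accumulates coverage and can stop early once the ray is effectively opaque. A single intensity channel is used for brevity, and the function names and the `opaque` threshold are assumptions.

```python
def composite_back_to_front(samples):
    """samples: list of (intensity, alpha), ordered nearest-first along the ray."""
    color = 0.0
    for value, alpha in reversed(samples):
        # "Over" operator: the nearer sample is blended on top of what lies behind.
        color = value * alpha + color * (1.0 - alpha)
    return color

def composite_front_to_back(samples, opaque=0.999):
    """Same result, walking away from the eye, with early ray termination."""
    color, coverage = 0.0, 0.0
    for value, alpha in samples:
        # Each sample contributes only through the transparency left in front of it.
        color += (1.0 - coverage) * alpha * value
        coverage += (1.0 - coverage) * alpha
        if coverage >= opaque:
            break  # everything farther along the ray is hidden
    return color

ray = [(0.2, 0.3), (0.9, 0.5), (0.5, 1.0), (1.0, 0.7)]
print(composite_back_to_front(ray), composite_front_to_back(ray))
```

In the sample ray the third sample is fully opaque, so the front-to-back walk stops there while the back-to-front walk still visits the hidden fourth sample; both produce the same value.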

The app renders 2D grayscale CT/MRI scans into 3D color in real-time. Through real-time segmentation, I'm able to filter/display selected body materials. Here's a video of the app:

I originally intended this as a kid's education app.

When I saw the results on an iPad, I realized it might have diagnostic applications - so I decided to build an online service: MedChroma.

MedChroma

MedChroma is a proof-of-concept online service I built to allow people to preview/upload DICOM files, preprocess the results, then download them to my RayCaster mobile app.

DICOM files are generally several gigabytes in size. I wanted to parse them in the browser so that users could preview the content, pick what they wanted to upload, then repackage the data, reducing the upload to just a few kilobytes.
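One cheap first step when triaging a dropped folder is to read only the start of each file. DICOM Part 10 files carry a 128-byte preamble followed by the four bytes `DICM`, so 132 bytes are enough to tell whether a file is worth parsing further. The sketch below shows that check in Python for brevity (the service itself parses in the browser); `looks_like_dicom` and `pick_dicom_files` are hypothetical helpers, and legacy DICOM files without the preamble would need a different test.

```python
DICOM_MAGIC = b"DICM"
PREAMBLE_LENGTH = 128

def looks_like_dicom(header: bytes) -> bool:
    """True if the leading bytes carry the DICOM Part 10 'DICM' prefix."""
    end = PREAMBLE_LENGTH + len(DICOM_MAGIC)
    return len(header) >= end and header[PREAMBLE_LENGTH:end] == DICOM_MAGIC

def pick_dicom_files(headers_by_name):
    """headers_by_name: mapping of file name -> the first bytes of that file."""
    return sorted(name for name, head in headers_by_name.items()
                  if looks_like_dicom(head))

dropped = {
    "scan001": bytes(128) + b"DICM" + b"\x02\x00",
    "notes.txt": b"hello",
    "thumb.png": b"\x89PNG" + bytes(200),
}
print(pick_dicom_files(dropped))
```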

This video demonstrates dropping a DICOM folder onto the service, parsing and extracting CT/MRI scans, scrubbing in 2D, then previewing in 3D before uploading to the service.

Most of the system is functionally complete; I still need to build a gallery, scale it out, and do some aesthetic redesign.

I put this project on hold when I received an offer to join Naked Labs as the founding engineer and VP of SW Engineering - building a 3D body scanner.

Naked Labs

For my first year and a half as VP of SW Engineering at Naked Labs, I was the sole software engineer creating all our Cloud, IoT, and Mobile apps. In addition, we had a great computer vision guy in Austria; everyone else worked on hardware or marketing.

We created a 3D body scanner that had 3 pairs of IR depth sensors embedded in a mirror, and a scale that turned you 380 degrees while scanning.

The results were 3D models of your body with volume, height, weight, body-fat index, and circumference measurements of various parts of your body.

My Cloud/IoT innovation was to create a single Node.js framework used by both the Cloud (AWS) and IoT (Linux), using only C/C++ and JavaScript for the entire system, allowing me to move engineers to work on any part of our system.

The following page has videos of the Naked Labs iOS and Android apps I built.

Naked Labs OpenGL Mobile Apps

These are iOS (Obj-C) and Android (Java/JNI) apps I designed and implemented using OpenGL, WiFi, Bluetooth, and discovery protocols to drive our scanner, capture 3D models and measurements, and display the results. I designed/implemented OpenGL shaders that I shared between iOS and Android.

- iOS App

- Android Prototype

The following page covers my work at Bellus3D building a 3D face scanner.

Bellus3D Head & Face Scanner

I joined Bellus3D as Director of Engineering (hardware and software), with reports in Silicon Valley and Taiwan. I designed and implemented our IoT system, and all the networking/discovery on our Android 3D depth sensor product.

We built a system that included an array of up to 7 of our WiFi 3D sensors, which captured IR/Color/Depth streams, pre-processed depth, and streamed frames to our IoT device. The IoT device then further processed/merged the data into a photo-realistic 3D head/face model for uploading to the Cloud and distributing to web apps and mobile devices.

My innovations included an algorithm for automatically identifying the position of a depth sensor within our array of 3D cameras.

The following reviews some of my Color Model work, which led me to develop an ethnically-neutral Skin Detector.

Computer Vision: Color Models

As VP of Engineering at Fabrik, I used a variation of my cHL color model (IHC) and a feature-matching algorithm I developed to score/sort images in our visual search IoT device.

Prior to joining Apple, I used my custom color models to dramatically improve speed performance and reduce false positives in my face detection algorithms.

OpenGL & OpenCV

As CTO of Appscio in 2007, I began working with OpenCV and face detection algorithms - correlating voice/face recognition to produce highly accurate person recognition.

Contracting to Blue Planet (Hawaii) in 2009, I developed a system to classify images (indoor/outdoor, plants/sky/water, people/scene) and score/sort each group by a rules-based definition of best images for each category.

Also in 2009, Apple invited me in to interview for a face recognition role.

The following is a presentation of the iPhone demo I gave during my interview.

Face Detection & Recognition

For my interview at Apple, I demo'd the iPhone app I had written over the previous weekend, resulting in an offer as Sr. Computer Vision Researcher to drive face detection/recognition tech across the company.

My algorithms ran faster and more accurately than the face/feature detector then in use by Apple.

I can't demo my work at Apple, but I can talk about what I did there.

Apple: Sr Computer Vision Engineer

iPhoto App

Reporting to the iPhoto engineering manager, I improved the speed performance and accuracy of face detection/recognition and face groupings.

Unify Our Face Recognition Framework

Reporting to the director for desktop products (iPhoto/Aperture/iMovie), I created a unified face recognition framework for all our apps.

Strategic Initiatives

Reporting to our VP of Engineering, I was the technical liaison between our researchers, engineers, and marketing.

I established a definition of "face" for various use cases (security, consumer photos, artwork, etc). I then built a DB and repository of images with truth tables of faces by these categories, and created SDKs for hardware and software apps to record and compare net improvements/degradations in speed performance and accuracy between algorithms.
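As a generic illustration of scoring a detector against ground-truth annotations (not the SDKs described above), the sketch below matches detected boxes to truth boxes by intersection-over-union and reports precision and recall. The box format, the 0.5 threshold, and the greedy matching strategy are all assumptions.

```python
def iou(a, b):
    """Intersection over union of two (x0, y0, x1, y1) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def score(detections, truth, threshold=0.5):
    """Greedy one-to-one matching; returns (precision, recall)."""
    unmatched = list(truth)
    hits = 0
    for box in detections:
        # Pair each detection with its best remaining truth box, if any.
        best = max(unmatched, key=lambda t: iou(box, t), default=None)
        if best is not None and iou(box, best) >= threshold:
            unmatched.remove(best)
            hits += 1
    precision = hits / len(detections) if detections else 1.0
    recall = hits / len(truth) if truth else 1.0
    return precision, recall

truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
found = [(1, 1, 10, 10), (50, 50, 60, 60)]
print(score(found, truth))
```

Running the same truth set through two detectors and comparing the resulting pairs gives a simple way to record whether a change improved or degraded accuracy.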

SKUR: Lidar 3D Facilities Scanning

Through my company Graphcomp, I completed a 10-month contract with SKUR as a Senior Computer Vision engineer.

SKUR was building a system that compared 3D CAD models of large facilities (airports, refineries) against lidar scans of those sites in progress.

My task was to develop a way to correlate the CAD models with lidar point clouds so that we could track construction progress, deviations, and missing/displaced/deformed components, and provide timely status reports to facilities developers. In addition, I was tasked with improving SKUR's WebGL/three.js rendering system.

Here are some details on the computer vision work I did at SKUR.

SKUR: Automated 3D Alignment

I used FFTs, log-polar transforms, and feature-matching to align CAD vector models with lidar point clouds having unknown translation, scale, and lateral orientation (only 'up' was known).
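The reason FFTs and log-polar transforms pair well for this kind of alignment is that, after resampling around a common center, a rotation becomes a circular shift along the angle axis (and a scale change becomes a shift along the log-radius axis), and a shift can be recovered by phase correlation. The sketch below shows only that one-dimensional core on a made-up angular profile, using a naive DFT from the standard library; it is not the SKUR pipeline, and `dft` and `circular_shift` are hypothetical helpers.

```python
import cmath

def dft(signal, inverse=False):
    """Naive O(n^2) discrete Fourier transform; adequate for a short demo."""
    n = len(signal)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        total = sum(x * cmath.exp(sign * 2j * cmath.pi * k * t / n)
                    for t, x in enumerate(signal))
        out.append(total / n if inverse else total)
    return out

def circular_shift(reference, moved):
    """Estimate s such that moved[i] == reference[(i - s) % n]."""
    cross = []
    for a, b in zip(dft(reference), dft(moved)):
        product = b * a.conjugate()
        magnitude = abs(product)
        # Keep only the phase; drop bins that carry no energy.
        cross.append(product / magnitude if magnitude > 1e-12 else 0j)
    correlation = dft(cross, inverse=True)
    # The correlation peaks at the shift between the two signals.
    return max(range(len(correlation)), key=lambda i: correlation[i].real)

# Made-up angular profile with 36 bins of 10 degrees each.
profile = [float((i * i) % 17) for i in range(36)]
rotated = profile[-9:] + profile[:-9]   # rotate by 9 bins
bins = circular_shift(profile, rotated)
print(bins, "bins =", bins * 10, "degrees")
```

A production version would use a real FFT library and two-dimensional log-polar images so that rotation and scale are recovered together; translation is then found by a second phase correlation on the de-rotated, rescaled data.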

Here is a list of my computer vision patents and other innovations.

Patents And Other Innovations

If you are interested, here are some notes on this portfolio.

Portfolio

Browser Support

In order of compatibility: Chrome, Edge, Safari, Firefox.

How I Built This